AI CEO Autonomy Limits Forecast

Will the CEO of OpenAI, Meta, or Alphabet Publicly Commit to Specific AI System Autonomy Limits Before January 1, 2027?

Executive Summary

Forecast: 35%

The question asks whether Sam Altman (OpenAI), Mark Zuckerberg (Meta), Sundar Pichai (Alphabet), or Demis Hassabis (Google DeepMind) will make public commitments to specific technical limitations on AI system autonomy before January 1, 2027. Based on comprehensive analysis of current CEO positions, regulatory pressures, and market dynamics, I assess this probability at 35%.

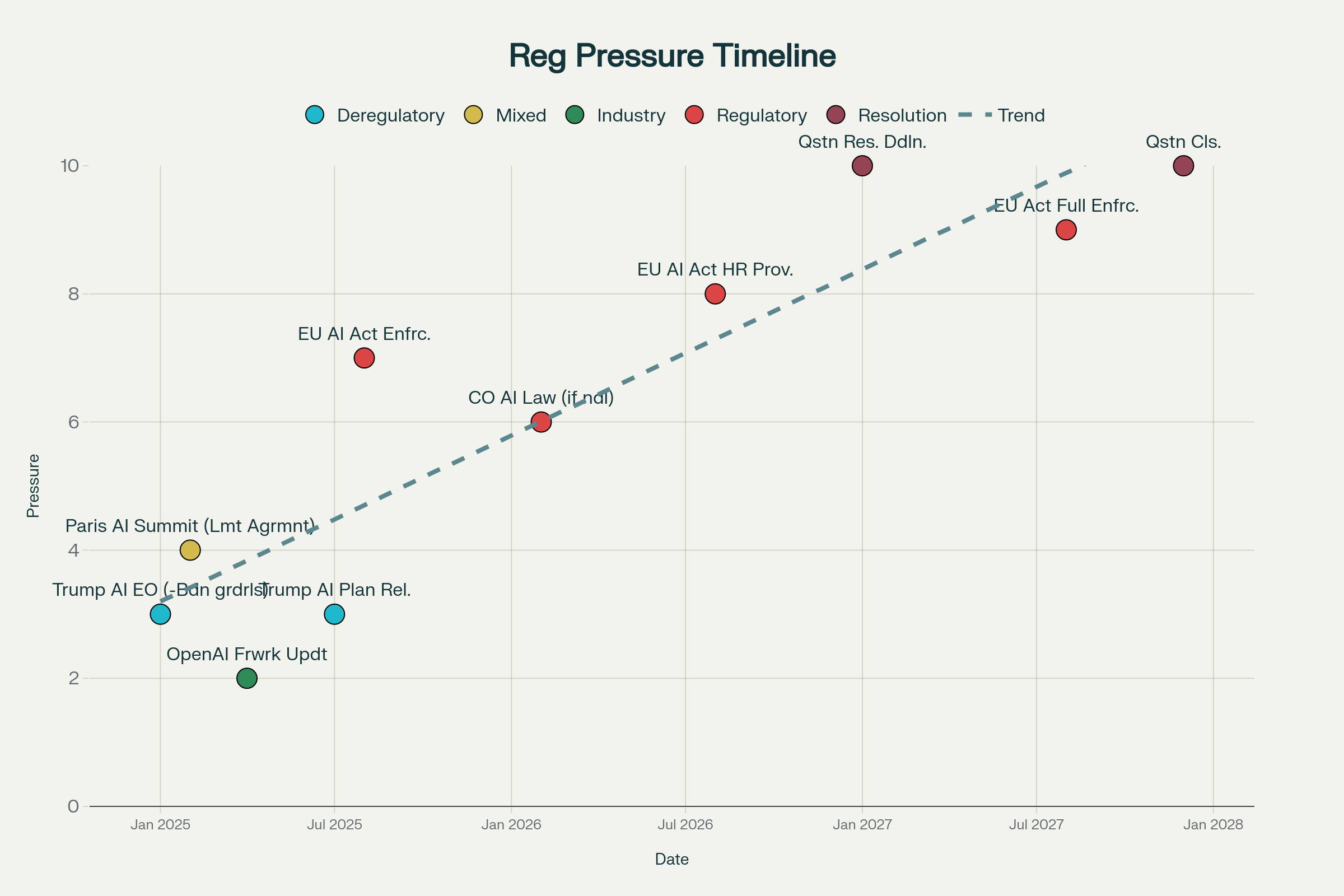

Timeline of AI Regulatory Pressure Leading to Resolution Deadline

Current CEO Positioning Analysis

The research reveals significant variation in CEO approaches to AI autonomy limitations:

| Company | CEO | Safety Stance | Technical Commitments |
|---|---|---|---|
| OpenAI | Sam Altman | Moderate - Updated preparedness framework but weakened some restrictions | Some structure via Safety Advisory Group, but lacks specific technical constraints |
| Meta | Mark Zuckerberg | Cautious on superintelligence - May limit open-sourcing advanced models | General safety principles, no specific autonomy boundaries |
| Alphabet | Sundar Pichai | Growth-focused - Emphasizes AI as “accelerator” rather than risk | No specific technical limitations mentioned |
| Google DeepMind | Demis Hassabis | Most safety-conscious - Warns of AI race hazards | Updated Frontier Safety Framework with some security protocols |

Technical Analysis:

None of the CEOs has yet made a commitment that meets all three resolution criteria simultaneously (concrete technical limitations, verifiable oversight mechanisms, and explicit autonomy boundaries). The closest is OpenAI’s Safety Advisory Group structure, but this lacks the specific technical constraints required (e.g., hard-coded limitations, API-level restrictions preventing autonomous behavior without human approval).

Regulatory Pressure Trajectory

The timeline analysis reveals escalating regulatory pressure approaching the January 2027 resolution deadline:

Key Pressure Points:

- August 2025: EU AI Act obligations for general-purpose AI models begin to apply (7/10 pressure level)

- February 2026: Colorado AI law implementation (if not delayed)

- August 2026: EU AI Act high-risk system provisions begin to apply (8/10 pressure level)

- January 1, 2027: Resolution deadline, which falls during peak regulatory pressure

Counteracting Forces:

- Trump Administration: Active deregulation agenda removing AI guardrails [1][2][3]

- Competitive Dynamics: AI race mentality discouraging self-imposed limitations [4][5]

- Industry Resistance: Strong lobbying against specific technical restrictions [6][7]

Probability Methodology

Base Rate Analysis: Historical precedent for specific technical commitments by tech CEOs: ~20%

Adjustment Factors:

- Regulatory Pressure Multiplier (1.8x): EU AI Act and state laws create compliance incentives

- Competitive Dynamics Factor (0.7x): Race to AGI discourages self-limitations

- Political Environment Factor (0.6x): Trump administration opposes AI restrictions

- Public Scrutiny Factor (1.2x): Increasing oversight demands

- Timeline Urgency Factor (1.5x): 16-month window creates action pressure

Individual CEO Probabilities:

- Demis Hassabis (DeepMind): 30% - Most safety-focused statements and existing framework [4][8][9]

- Sam Altman (OpenAI): 25% - Has preparedness structure but weakened recent positions [10][11][12]

- Mark Zuckerberg (Meta): 20% - Safety conscious on superintelligence but competitive focus [13][14][15]

- Sundar Pichai (Alphabet): 15% - Growth-oriented with minimal safety commitments [16][17][18]

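Overall Probability Calculation: Treating the four individual probabilities as independent, the combined probability that at least one CEO commits is 1 - (0.70 × 0.75 × 0.80 × 0.85) = 64.3%. Adjusted for the strictness of the resolution criteria, the forecast is 35%.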
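The arithmetic behind these figures can be checked with a short sketch. It assumes the four individual probabilities are independent, which is the reading that reproduces the 64.3% figure. As a further assumption not stated in the text, it multiplies the base rate by the five adjustment factors directly. The variable names are illustrative only.

```python
from math import prod

# Per-CEO probabilities as listed in the text.
ceo_probs = {
    "Hassabis": 0.30,
    "Altman": 0.25,
    "Zuckerberg": 0.20,
    "Pichai": 0.15,
}

# Base rate and adjustment factors as listed in the text.
base_rate = 0.20
factors = {
    "regulatory_pressure": 1.8,
    "competitive_dynamics": 0.7,
    "political_environment": 0.6,
    "public_scrutiny": 1.2,
    "timeline_urgency": 1.5,
}


def prob_at_least_one(probs):
    """P(at least one event occurs), assuming the events are independent."""
    return 1.0 - prod(1.0 - p for p in probs)


combined = prob_at_least_one(ceo_probs.values())

# Assumption: the factors are applied multiplicatively to the base rate.
adjusted_base = base_rate * prod(factors.values())

# Discount implied by moving from the combined figure to the 35% forecast.
implied_strictness_discount = 0.35 / combined

print(f"combined (independent): {combined:.1%}")        # 64.3%
print(f"base rate x factors:    {adjusted_base:.1%}")   # 27.2%
print(f"implied discount:       {implied_strictness_discount:.2f}")  # 0.54
```

Under these assumptions the multiplicative route gives roughly 27%, while the 35% headline corresponds to discounting the 64.3% combined figure by a little under half. The text does not say how the two routes are reconciled, so the sketch reports them separately rather than merging them.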

Resolution Criteria Challenges

The question requires all three elements simultaneously:

- Concrete Technical Limitations: Specific restrictions like hard-coded constraints or API-level controls

- Verifiable Oversight Mechanisms: Defined human-in-the-loop processes

- Explicit Autonomy Boundaries: Formal limits beyond general safety principles

Current Evidence Gap: Existing CEO statements focus on general safety principles rather than the specific technical commitments required. Even safety-conscious leaders like Hassabis speak about frameworks and cooperation rather than concrete autonomy limitations [4][19][9].
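To make the three criteria concrete, here is a minimal sketch of what an API-level human-approval gate could look like. Every name in it (`Action`, `MAX_AUTONOMOUS_STEPS`, `execute`, `audit_log`) is hypothetical and chosen for illustration; it does not describe any company's actual system or framework.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Action:
    name: str
    autonomous_steps: int  # steps the agent would take without a human check


class ApprovalRequired(Exception):
    """Raised when an action exceeds the autonomy limit without human approval."""


# Hypothetical hard-coded limit: the "explicit autonomy boundary".
MAX_AUTONOMOUS_STEPS = 5

# Hypothetical append-only record: the "verifiable oversight mechanism".
audit_log: List[Tuple[str, str]] = []


def execute(action: Action,
            approver: Optional[Callable[[Action], bool]] = None) -> str:
    """Run an action, requiring human sign-off above the autonomy limit."""
    if action.autonomous_steps > MAX_AUTONOMOUS_STEPS:
        # Human-in-the-loop step: refuse unless a reviewer approves.
        if approver is None or not approver(action):
            audit_log.append((action.name, "blocked"))
            raise ApprovalRequired(f"{action.name} needs human approval")
        audit_log.append((action.name, "approved_by_human"))
    else:
        audit_log.append((action.name, "within_limit"))
    return f"executed {action.name}"
```

A public commitment that satisfied the criteria would plausibly need to specify each of the three pieces sketched here: the numeric or categorical limit, who approves actions beyond it, and how outsiders can verify that the gate was applied.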

First-Order Effects (Immediate Consequences)

If YES (35% probability):

- Regulatory Precedent: First major self-imposed technical AI limitations by industry leaders

- Competitive Response: Pressure on other AI companies to match commitments

- Market Confidence: Potentially increased public trust in AI deployment

- Technical Innovation: Development of new oversight technologies and human-in-the-loop systems

If NO (65% probability):

- Regulatory Gap: Continued absence of industry self-regulation on autonomy

- Government Intervention: Increased likelihood of mandatory technical restrictions

- Public Pressure: Growing demands for external oversight of AI companies

- International Divergence: EU moves ahead with mandatory technical standards while US companies resist

Second-Order Ripples (Downstream Changes)

Commitment Scenario:

- Standard Setting: Technical specifications become industry benchmarks

- Investment Flow: Capital shifts toward “controllable AI” research and development

- Talent Migration: AI safety researchers gain prominence within companies

- Global Adoption: International companies adopt similar frameworks to remain competitive

No Commitment Scenario:

- Regulatory Acceleration: Faster implementation of mandatory technical standards

- Market Fragmentation: Different compliance regimes create operational complexity

- Innovation Direction: AI development prioritizes capability over controllability

- Geopolitical Tension: US-EU divergence on AI governance approaches intensifies

Butterfly Effects (Unexpected Cascades)

Positive Cascade (Low Probability, High Impact): A single CEO commitment triggers industry-wide adoption of technical autonomy limitations, establishing new norms that prevent AI race dynamics and enable more careful development trajectories.

Negative Cascade (Moderate Probability, High Impact): Continued absence of self-regulation leads to aggressive government intervention, potentially including technical architecture mandates that stifle innovation and drive AI development offshore.

Geopolitical Cascade: US company commitments or refusals create strategic advantages/disadvantages in international AI competition, particularly with China’s state-directed AI development approach.

Financial Market Implications

| Asset Class | Direction | Rationale | Risk Level |
|---|---|---|---|
| AI Infrastructure Stocks | Mixed | Autonomy limits may require new hardware/software solutions | Medium |
| Big Tech (GOOGL, META) | Slight Negative | Compliance costs and development constraints | Low-Medium |
| AI Safety Startups | Strong Positive | Increased demand for oversight technologies | Medium |
| Defense Contractors | Positive | Government may seek alternative AI providers | Low |
| EU Tech Stocks | Positive | Compliance advantage in regulated markets | Medium |

Specific Trading Implications:

- Long: Companies developing human-in-the-loop AI systems and oversight technologies

- Short/Avoid: Pure-play autonomous AI companies without safety frameworks

- Hedge: European AI companies against US peers if regulatory divergence widens

Optimal Timing: Monitor for CEO statements around major regulatory deadlines (August 2025 EU enforcement, potential 2026 Colorado implementation) for trading opportunities.

Confidence Assessment

Why This Forecast is Likely Correct:

- Regulatory Pressure is Real: EU AI Act enforcement creates genuine compliance requirements [20][21]

- CEO Pragmatism: Business leaders understand regulatory inevitability and may prefer self-regulation

- Public Scrutiny: Increasing media and academic pressure on AI safety creates reputational incentives

- Technical Feasibility: Human-in-the-loop systems and API restrictions are implementable with current technology

Why It Might Be Wrong:

- Competitive Dynamics: AI race mentality may override safety considerations [4][5]

- Definitional Ambiguity: CEOs may make commitments that sound substantial but don’t meet the strict criteria

- Political Environment: Trump administration’s deregulatory approach may reduce pressure [1][2]

- Industry Coordination: Companies may prefer collective resistance to individual commitments

Blind Spots:

- Potential for sudden regulatory enforcement actions forcing immediate commitments

- Unknown internal company developments or safety incidents that could change CEO positions

- International events (e.g., AI-related incidents) that dramatically shift public opinion

Betting Strategy

Position Sizing: A 35% probability estimate, combined with uncertainty about how the criteria will be interpreted, suggests moderate position sizing relative to other forecasts. Allocate 15-20% of prediction capital.
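One way to sanity-check a position size is the Kelly criterion for a binary contract, sketched below. This is a swapped-in technique rather than the method behind the 15-20% figure, and the market prices in the usage lines are hypothetical, since the text quotes no market price.

```python
from typing import Tuple


def kelly_fraction(p_yes: float, yes_price: float) -> Tuple[str, float]:
    """Kelly-optimal side and bankroll fraction for a binary contract.

    p_yes:     forecaster's probability that the question resolves YES.
    yes_price: market price of a YES share paying 1 on YES (0 < price < 1).
    A NO share is assumed to cost 1 - yes_price.
    """
    if not 0.0 < yes_price < 1.0:
        raise ValueError("yes_price must be strictly between 0 and 1")
    if not 0.0 <= p_yes <= 1.0:
        raise ValueError("p_yes must be between 0 and 1")

    if p_yes > yes_price:
        # Buying YES has positive expected value.
        return "YES", (p_yes - yes_price) / (1.0 - yes_price)
    if p_yes < yes_price:
        # Buying NO has positive expected value.
        return "NO", (yes_price - p_yes) / yes_price
    return "NONE", 0.0  # no edge at this price


# Hypothetical market prices, for illustration only.
for price in (0.25, 0.35, 0.50):
    side, fraction = kelly_fraction(0.35, price)
    print(f"price={price:.2f} -> side={side}, fraction={fraction:.3f}")
```

The result depends entirely on the gap between the 35% forecast and the market price: with no gap there is no position to take, and full-Kelly fractions are commonly scaled down when the probability estimate itself is uncertain.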

Validating Indicators for Success:

- CEO speeches at major AI conferences (NeurIPS, ICML, company developer conferences)

- Regulatory filing updates mentioning technical AI limitations

- Company blog posts or research papers detailing specific oversight mechanisms

- Congressional testimony providing concrete autonomy commitments

Failure Modes Proving Forecast Wrong:

- CEOs make general safety statements that fall short of specific technical commitments

- Trump administration successfully reduces regulatory pressure enough to eliminate incentives

- AI race dynamics accelerate to the point where competitive considerations override safety concerns

- Alternative regulatory approaches (international agreements, industry standards) reduce pressure for individual CEO commitments

The forecast balances genuine regulatory pressure and some CEO safety consciousness against strong competitive incentives and political resistance to AI limitations. The 35% probability reflects significant uncertainty while acknowledging that at least one of four CEOs may find it strategically advantageous to make specific technical commitments before the January 2027 deadline.

1. https://www.omm.com/insights/alerts-publications/trump-administration-releases-ai-action-plan-and-issues-executive-orders-to-promote-innovation/
2. https://www.seyfarth.com/news-insights/trump-administration-releases-ai-action-plan-and-three-executive-orders-on-ai-what-employment-practitioners-need-to-know.html
3. https://www.paulhastings.com/insights/client-alerts/president-trump-signs-three-executive-orders-relating-to-artificial
4. https://www.axios.com/2025/02/14/hassabis-google-ai-race-hazards
5. https://www.transformernews.ai/p/what-ai-companies-want-from-trump
6. https://www.clarkhill.com/news-events/news/colorado-ai-law-update-special-legislative-session-offers-opportunity-for-revisions/
7. https://www.fisherphillips.com/en/news-insights/colorados-landmark-ai-law-still-on-track-for-2026.html
8. https://www.windowscentral.com/software-apps/google-deepmind-ceo-ai-systems-dont-warrant-pause-on-development
9. https://deepmind.google/discover/blog/updating-the-frontier-safety-framework/
10. https://fortune.com/2025/04/16/openai-safety-framework-manipulation-deception-critical-risk/
11. https://fortune.com/2025/06/20/openai-files-sam-altman-leadership-concerns-safety-failures-ai-lab/
12. https://openai.com/index/updating-our-preparedness-framework/
13. https://www.tomsguide.com/ai/zuckerberg-reveals-metas-ai-superintelligence-breakthrough-and-why-you-wont-be-using-it-anytime-soon
14. https://www.mk.co.kr/en/it/11382672
15. https://finance.yahoo.com/news/mark-zuckerberg-issues-bold-claim-235000108.html
16. https://techcrunch.com/2025/06/04/alphabet-ceo-sundar-pichai-dismisses-ai-job-fears-emphasizes-expansion-plans/
17. https://www.eweek.com/news/alphabet-ceo-sundar-pichai-ai-jobs-google/
18. https://searchengineland.com/google-search-profoundly-change-2025-448986
19. https://blog.google/technology/ai/responsible-ai-2024-report-ongoing-work/
20. https://www.linkedin.com/pulse/ceos-new-ai-compliance-crunch-how-survive-karl-mehta-uvdvc
21. https://worldview.stratfor.com/article/fate-eus-ambitious-ai-act
22. https://www.metaculus.com/questions/39159/will-openai-meta-or-alphabet-
23. https://www.ainvest.com/news/openai-announces-gpt-5-release-2025-focus-safety-2507/
24. https://www.aol.com/finance/zuckerberg-says-meta-needs-careful-124636902.html
25. https://www.cnbc.com/2024/12/27/google-ceo-pichai-tells-employees-the-stakes-are-high-for-2025.html
26. https://blog.samaltman.com/reflections
27. https://www.youtube.com/watch?v=G0GjN8qCpi4
28. https://neworleanscitybusiness.com/blog/2025/07/28/sam-altman-ai-risks-warning/
29. https://www.nbcnews.com/tech/tech-news/zuckerberg-says-ai-superintelligence-sight-touts-new-era-personal-empo-rcna221918
30. https://www.cnbc.com/2025/08/18/openai-sam-altman-warns-ai-market-is-in-a-bubble.html
31. https://www.theverge.com/ai-artificial-intelligence/715951/mark-zuckerberg-meta-ai-superintelligence-scale-openai-letter
32. https://www.reddit.com/r/google/comments/1ha14ed/google_ceo_ai_development_is_finally_slowing/
33. https://www.icrc.org/en/download/file/102852/autonomy_artificial_intelligence_and_robotics.pdf
34. https://magai.co/guide-to-human-oversight-in-ai-workflows/
35. https://arxiv.org/html/2407.17347v1
36. https://www.sipri.org/sites/default/files/2020-06/2006_limits_of_autonomy.pdf
37. https://workos.com/blog/why-ai-still-needs-you-exploring-human-in-the-loop-systems
38. https://futureoflife.org/wp-content/uploads/2025/07/FLI-AI-Safety-Index-Report-Summer-2025.pdf
39. https://futureoflife.org/ai/a-summary-of-concrete-problems-in-ai-safety/
40. https://www.holisticai.com/blog/human-in-the-loop-ai
41. https://futureoflife.org/ai-safety-index-summer-2025/
42. https://www.ganintegrity.com/resources/blog/ai-governance/
43. https://arxiv.org/html/2506.12469v1
44. https://www.mineos.ai/articles/ai-governance-framework
45. https://beam.ai/agentic-insights/from-co-pilots-to-ai-agents-exploring-the-levels-of-autonomy-in-business-automation
46. https://www.aljazeera.com/economy/2025/2/5/chk_google-drops-pledge-not-to-use-ai-for-weapons-surveillance
47. https://www.diligent.com/resources/blog/ai-governance
48. https://knightcolumbia.org/content/levels-of-autonomy-for-ai-agents-1
49. https://www.linkedin.com/pulse/5-levels-ai-autonomy-framework-evaluate-ais-potential-arndt-voges-aa4oc
50. https://datamatters.sidley.com/2023/12/07/world-first-agreement-on-ai-reached/
51. https://en.wikipedia.org/wiki/AI_Action_Summit
52. https://www.mofo.com/resources/insights/250729-a-call-to-action-president-trump-s-policy-blueprint
53. https://futureoflife.org/project/ai-safety-summits/
54. https://franceintheus.org/spip.php?article11651
55. https://www.brennancenter.org/our-work/analysis-opinion/how-trumps-ai-policy-could-compromise-technology
56. https://www.rusi.org/explore-our-research/publications/commentary/what-comes-after-paris-ai-summit
57. https://www.whitehouse.gov/presidential-actions/2025/01/removing-barriers-to-american-leadership-in-artificial-intelligence/
58. https://ppl-ai-code-interpreter-files.s3.amazonaws.com/web/direct-files/1195e85de904557a16b518a7059be28c/559d2b00-309f-4715-9b85-117cf3d481e1/301d4f42.csv
59. https://ppl-ai-code-interpreter-files.s3.amazonaws.com/web/direct-files/1195e85de904557a16b518a7059be28c/630f5392-1698-4af6-a1df-f6c80e4c6cde/16a20842.csv
60. https://ppl-ai-code-interpreter-files.s3.amazonaws.com/web/direct-files/1195e85de904557a16b518a7059be28c/240aa67c-0b1f-412b-85a3-6999948db584/153a759b.csv
61. https://ppl-ai-code-interpreter-files.s3.amazonaws.com/web/direct-files/1195e85de904557a16b518a7059be28c/4fdbad19-742d-4a15-b66c-d7b98020a91f/0a541ada.csv